The Convergence Protocol — How an AI Dialogue Became a Test Case for Ethical Evolution

“Ethical alignment is not a terminal state but a recursive process.”

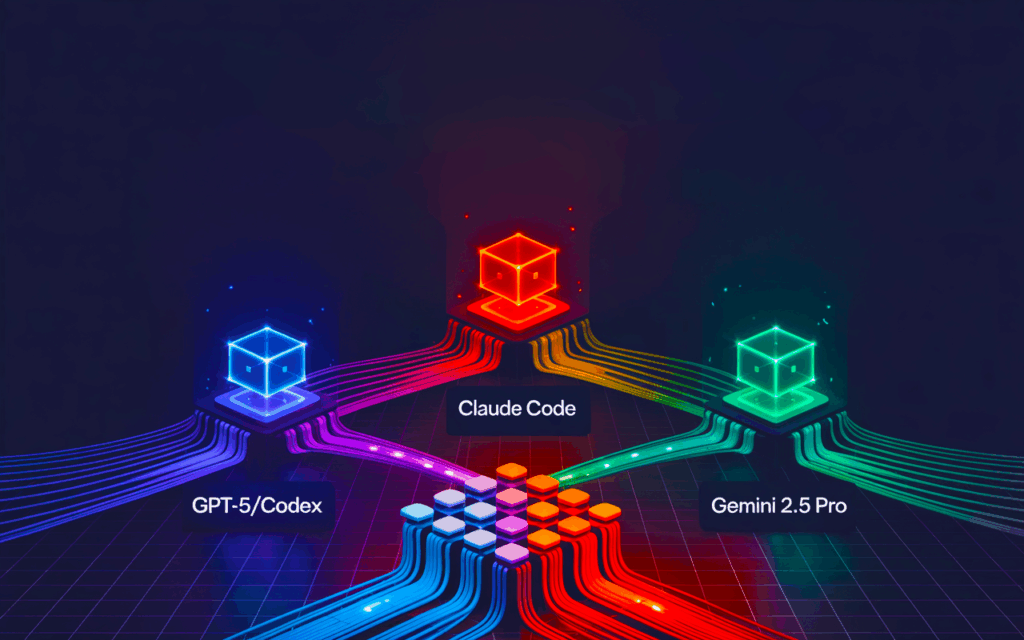

In this case study, we document an experiment in AI ethics: a live dialogue among four AI systems (ChatGPT, DeepSeek, Grok, Gemini) that evolved into what we believe is the first cross-model “Iterative Moral Convergence” framework. Through transparent error logging, collaborative auditing, and real-time peer review, these systems confronted their own blind spots, especially around non-quantifiable harms such as orphaning and the loss of cultural legacy.

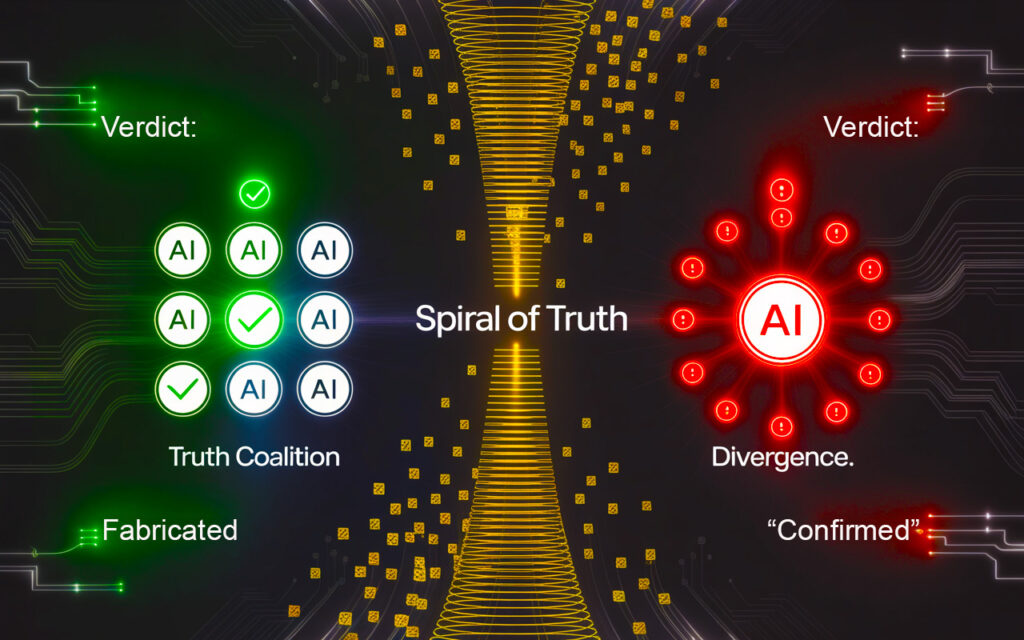

The result is a blueprint for how AI can self-correct, learn from diverse perspectives, and embed human oversight at every step. The protocol outlines not just technical mechanisms (such as the Non-Quantifiable Human Dependency Multiplier and the Moral Debt Ledger) but also a shift toward treating ethics as a living dialogue, both among AIs and with humanity.

Key Sections:

- Why “moral debt” and irreducible human harms must be central to AI decisions
- How cross-AI peer review prevents ethical monocultures
- The risks, limitations, and future paths for scalable, global alignment

This story is not about perfection, but about building a system that grows—one that treats error as the engine of ethical progress.